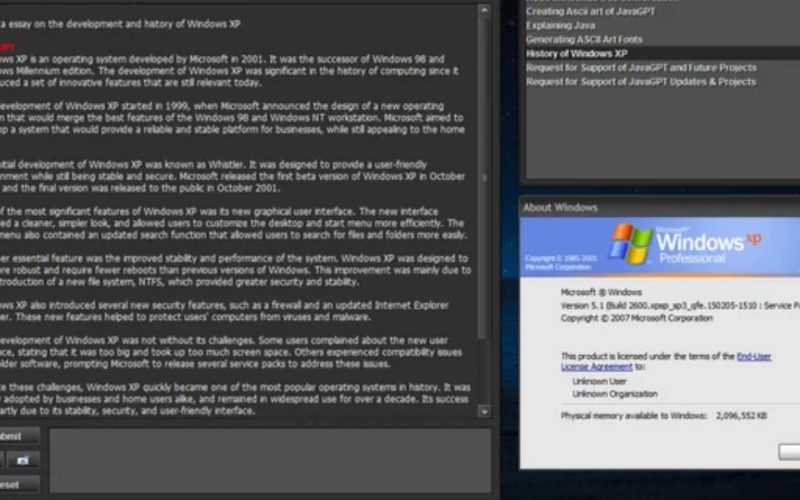

EXO Labs has published a detailed blog post about running Llama on Windows 98, along with a short social media video showing a capable large language model (LLM) running on a 26-year-old Pentium II PC. The video shows an ancient Elonex Pentium II @ 350 MHz booting into Windows 98, after which EXO launches its custom pure C inference engine, based on Andrej Karpathy’s Llama2.c, and asks the LLM to generate a story about Sleepy Joe. Surprisingly, it works, and the story is generated at a respectable pace.

This impressive feat is far from the end of EXO Labs’ ambitions. The somewhat mysterious organization emerged from stealth in September with a mission “to democratize access to AI.” It was founded by a team of researchers and engineers from Oxford University.

In a nutshell, EXO believes that a handful of megacorps controlling AI would be very bad for culture, truth, and other fundamental aspects of our society. Hence its stated goal: “Build open infrastructure to train frontier models and enable any human to run them anywhere.” Under this vision, ordinary people could train and run AI models on almost any device, and the Windows 98 demonstration is a totemic example of what can be done with (severely) limited resources.

Because the video posted to social media is rather brief, we were grateful to find EXO’s blog post about running Llama on Windows 98. The post is Day 4 of “the 12 days of EXO” series (so stay tuned).

As readers might expect, buying an old Windows 98 PC on eBay as the project’s foundation was the easy part; there were other hurdles to clear. EXO explains that getting data onto the old Elonex-branded Pentium II was a challenge, so the team resorted to “good old FTP” for file transfers over the machine’s Ethernet port.

Compiling modern code for Windows 98 was probably the bigger challenge. EXO was glad to find Andrej Karpathy’s llama2.c, which can be summarized as “700 lines of pure C that can run inference on models with the Llama 2 architecture.” With this resource and the old Borland C++ 5.02 IDE and compiler (plus a few minor tweaks), the code could be built into a Windows 98-compatible executable and run. The finished code is available on GitHub.

Alex Cheema, one of the people behind EXO, thanked Andrej Karpathy for his code and marveled at its performance, which delivered “35.9 tok/sec on Windows 98” using a 260K-parameter LLM with the Llama architecture. It is worth noting that Karpathy was previously director of AI at Tesla and was part of the founding team at OpenAI.

Of course, a 260K LLM is small, but it ran very quickly on an ancient 350 MHz single-core PC. According to the EXO blog, moving up to a 15M LLM resulted in a generation rate of somewhat more than 1 tok/sec. However, Llama 3.2 1B was glacially sluggish, clocking in at 0.0093 tok/sec.
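To put those throughput figures in perspective, the short sketch below converts tokens per second into seconds per token and into the time needed for a short story. The three rates are the ones quoted above; the 15M rate is entered as exactly 1.0 because the blog only says "somewhat more than 1", and the 100-token story length is a hypothetical value chosen for illustration.

```python
# Throughput figures quoted in the article, in tokens per second.
RATES_TOK_PER_SEC = {
    "260K": 35.9,
    "15M": 1.0,  # assumption: the source only says "somewhat more than 1"
    "Llama 3.2 1B": 0.0093,
}

STORY_TOKENS = 100  # hypothetical story length, not from the source


def seconds_per_token(rate_tok_per_sec: float) -> float:
    """Invert a throughput figure to get the latency of a single token."""
    return 1.0 / rate_tok_per_sec


def generation_seconds(rate_tok_per_sec: float, n_tokens: int) -> float:
    """Time needed to emit n_tokens at a constant rate."""
    return n_tokens / rate_tok_per_sec


for name, rate in RATES_TOK_PER_SEC.items():
    per_token = seconds_per_token(rate)
    total_min = generation_seconds(rate, STORY_TOKENS) / 60
    print(f"{name}: {per_token:.2f} s/token, "
          f"{total_min:.1f} min for {STORY_TOKENS} tokens")
```

At 0.0093 tok/sec, a single token takes roughly 107 seconds, so a 100-token story would need about three hours, compared with under three seconds for the 260K model.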

BitNet is the larger plan

By now it should be clear that this story is about more than getting an LLM to run on a Windows 98 machine. EXO rounds off its blog post by looking to the future, which it hopes will be democratized thanks to BitNet.

“BitNet is a transformer architecture that uses ternary weights,” the blog post explains. Importantly, with this architecture, a 7B parameter model requires only 1.38GB of storage. That may still make a 26-year-old Pentium II choke, but it is a trivial amount for modern hardware, or even for decade-old devices.
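One way to arrive at the 1.38GB figure is simple arithmetic on bits per weight. The sketch below assumes about 1.58 bits per ternary weight (a rounded value of log2(3), the information content of a three-valued weight) and decimal gigabytes; both are assumptions about how the number was derived, not something the blog post is quoted as stating. The 16-bit row is included only as a familiar point of comparison.

```python
import math


def model_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Storage for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


N_PARAMS = 7e9  # the 7B parameter model mentioned in the article

# A ternary weight takes one of three values, so it carries log2(3) bits.
ideal_bits = math.log2(3)

print(f"log2(3) = {ideal_bits:.4f} bits per ternary weight")
print(f"ternary, 1.58 bits:  {model_size_gb(N_PARAMS, 1.58):.4f} GB")
print(f"ternary, log2(3):    {model_size_gb(N_PARAMS, ideal_bits):.4f} GB")
print(f"16-bit weights:      {model_size_gb(N_PARAMS, 16):.1f} GB")
```

With 1.58 bits per weight the result is 1.3825 GB, which matches the quoted figure; the same model stored with 16-bit weights would need 14 GB, roughly ten times as much.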

EXO further emphasizes that BitNet is CPU-first, avoiding costly GPU requirements. This type of model is also said to be 50% more efficient than full-precision models, and it can run a 100B parameter model on a single CPU at human reading speeds (5 to 7 tok/sec).
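A rough intuition for why ternary weights suit CPUs: when every weight is -1, 0, or +1, a matrix-vector product needs no multiplications, only additions, subtractions, and skips. The toy sketch below illustrates that idea in plain Python. It is not BitNet's actual kernel, which is not described in the article; the function name, the `scale` parameter, and the example values are all hypothetical.

```python
def ternary_matvec(weights, x, scale=1.0):
    """Multiply a ternary matrix (entries in {-1, 0, +1}) by a vector.

    Each output element is built from additions and subtractions only;
    a single multiplication by `scale` per row restores the magnitude
    (scale is a hypothetical per-matrix factor used for illustration).
    """
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi   # +1 weight: add the activation
            elif w == -1:
                acc -= xi   # -1 weight: subtract the activation
            # 0 weight: contributes nothing, so it is skipped
        out.append(acc * scale)
    return out


# Hypothetical 2x3 ternary weight matrix and input vector.
W = [
    [1, 0, -1],
    [-1, 1, 1],
]
x = [0.5, 2.0, -1.0]

print(ternary_matvec(W, x))  # prints [1.5, 0.5]
```

Real implementations would also pack several ternary weights into each byte to realize the storage savings, which this sketch leaves out for clarity.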

Before we go, please note that EXO is still looking for help. If you want to avoid a future in which AI is locked into massive data centers owned by billionaires and megacorps, and you think you can contribute in some way, you could reach out to EXO.

For a more casual way to engage with EXO Labs, the team hosts a Discord Retro channel for discussing running LLMs on old hardware such as Macs, Gameboys, Raspberry Pis, and more.

Source: AI language model runs on a Windows 98 system with Pentium II and 128MB RAM